
Antialiasing

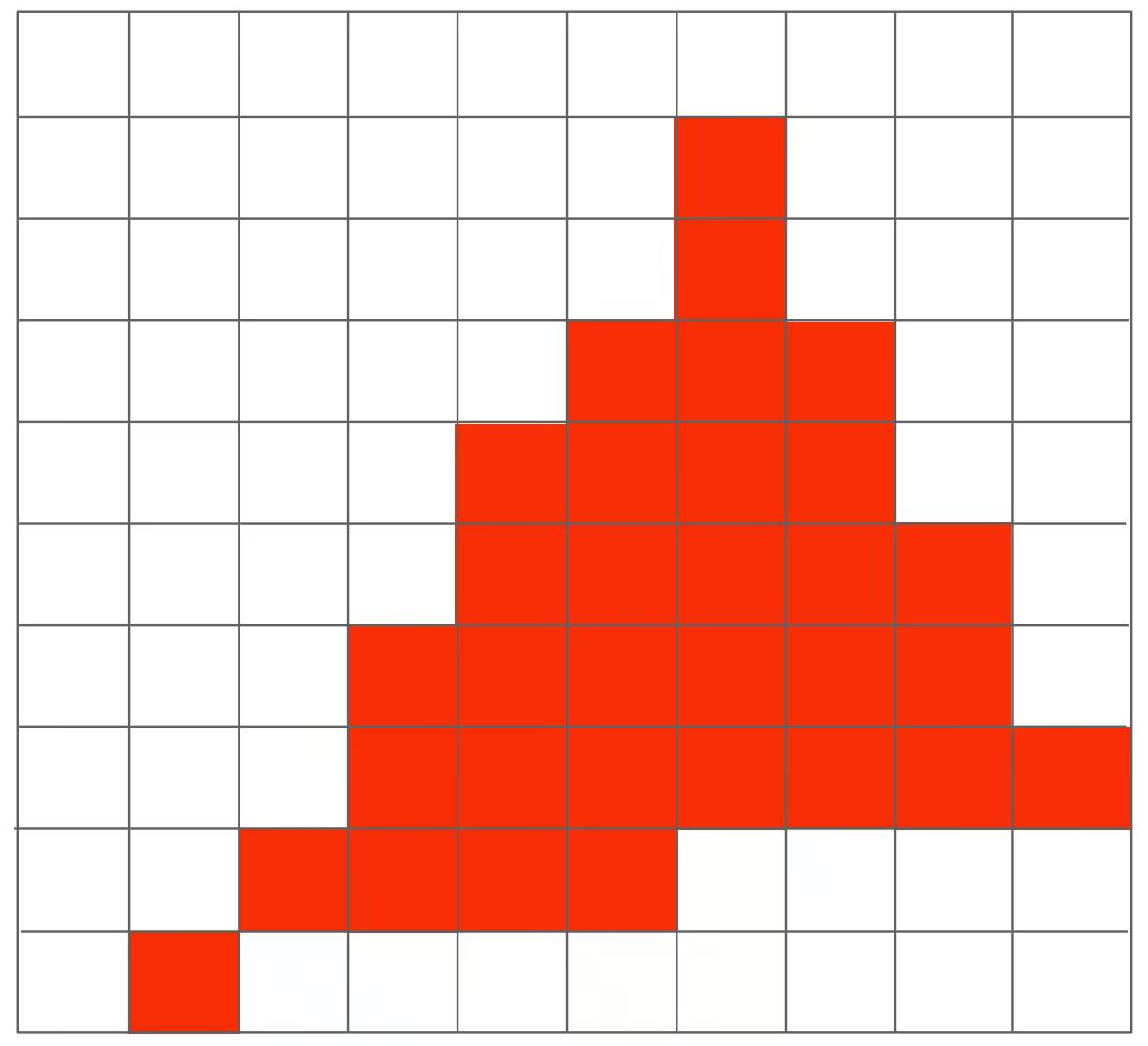

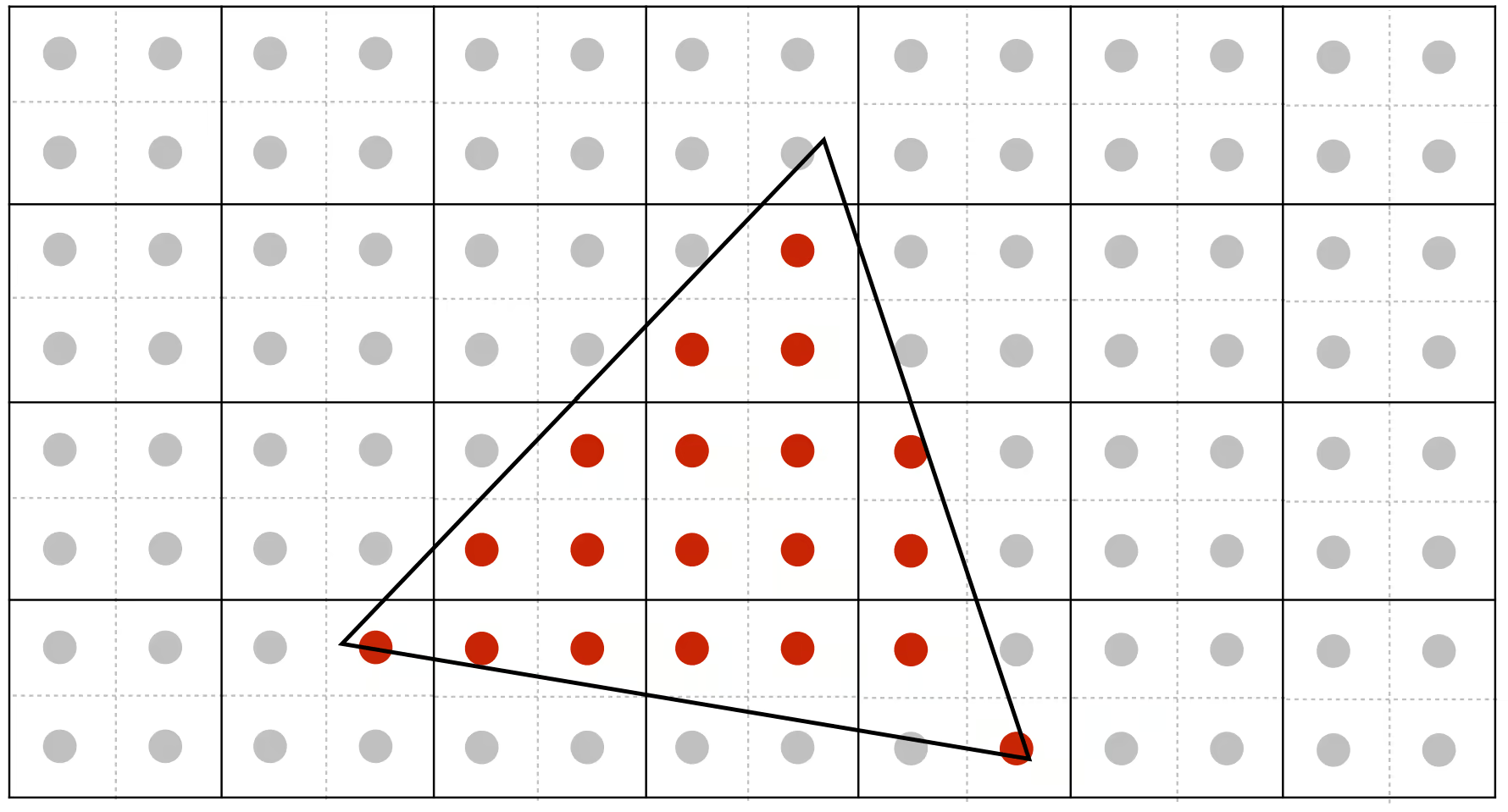

Deciding whether a pixel should be drawn by testing only its centre (point sampling) can cause aliasing.

Some artifacts include:

- Jaggies (artifacts caused by sampling in space)

- Moiré (artifacts resulting from down-sampling)

- Wagon wheel effect (artifacts due to sampling in time)

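These artifacts occur when the signal changes faster than the sampling can capture. By the Nyquist-Shannon sampling theorem, the sampling rate must be more than twice the highest frequency in the signal; below that rate, high frequencies show up as false lower frequencies.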
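A quick numeric check makes this concrete. The sketch below (the helper name `sample_cosine` is made up for illustration) samples a 9 Hz cosine at 10 Hz, well below its Nyquist rate of 18 Hz, and compares the result with a 1 Hz cosine sampled the same way.

```rust
use std::f64::consts::PI;

/// Sample cos(2*pi*freq*t) at `rate` samples per second, `n` samples in total.
fn sample_cosine(freq: f64, rate: f64, n: usize) -> Vec<f64> {
    (0..n)
        .map(|i| (2.0 * PI * freq * i as f64 / rate).cos())
        .collect()
}

fn main() {
    let rate = 10.0; // sampling rate in Hz, so the Nyquist limit is 5 Hz
    let high = sample_cosine(9.0, rate, 20); // 9 Hz: above the Nyquist limit
    let alias = sample_cosine(1.0, rate, 20); // 1 Hz = |9 - 10| Hz

    // The two sequences agree up to rounding error: once sampled,
    // the 9 Hz signal is indistinguishable from a 1 Hz signal.
    let max_diff = high
        .iter()
        .zip(&alias)
        .map(|(a, b)| (a - b).abs())
        .fold(0.0, f64::max);
    println!("max difference = {:e}", max_diff);
}
```

The alias frequency is the distance to the nearest multiple of the sampling rate, which is why 9 Hz folds down to 1 Hz here.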

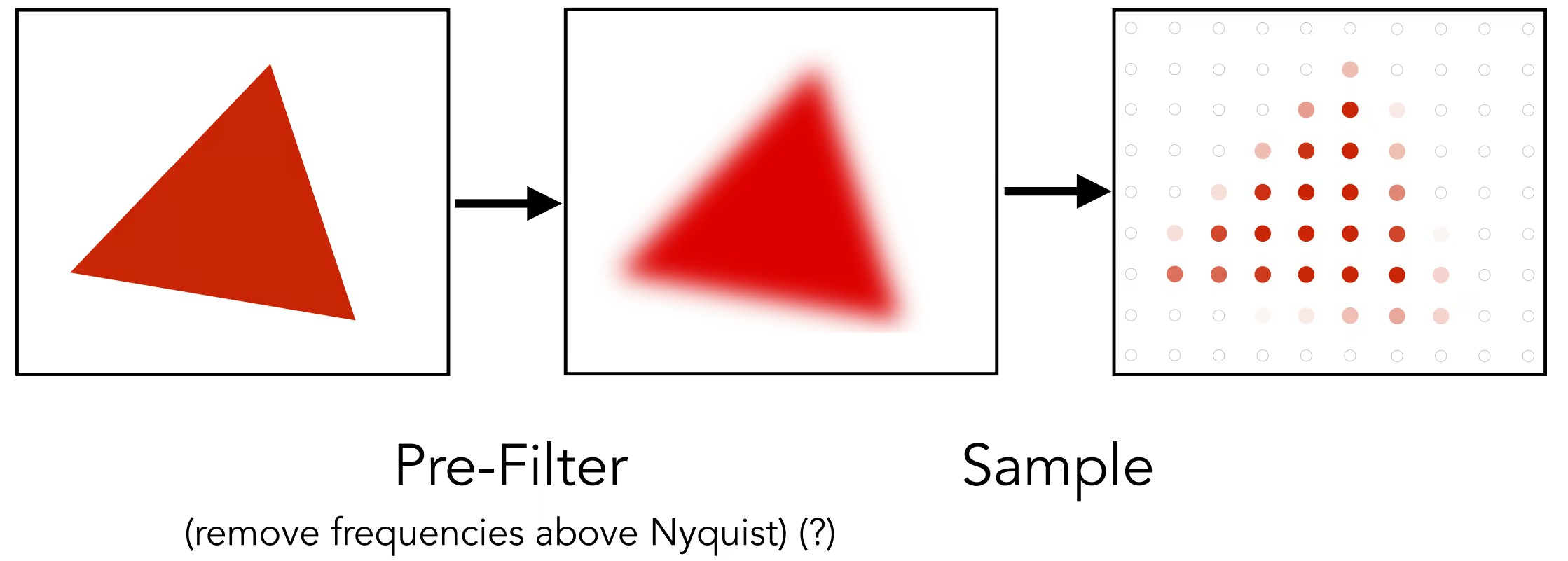

Pre-Filtering

To suppress high-frequency content, blur the signal with a low-pass filter before sampling.

But why does filtering first and then sampling reduce aliasing, whereas sampling first and then blurring does not?

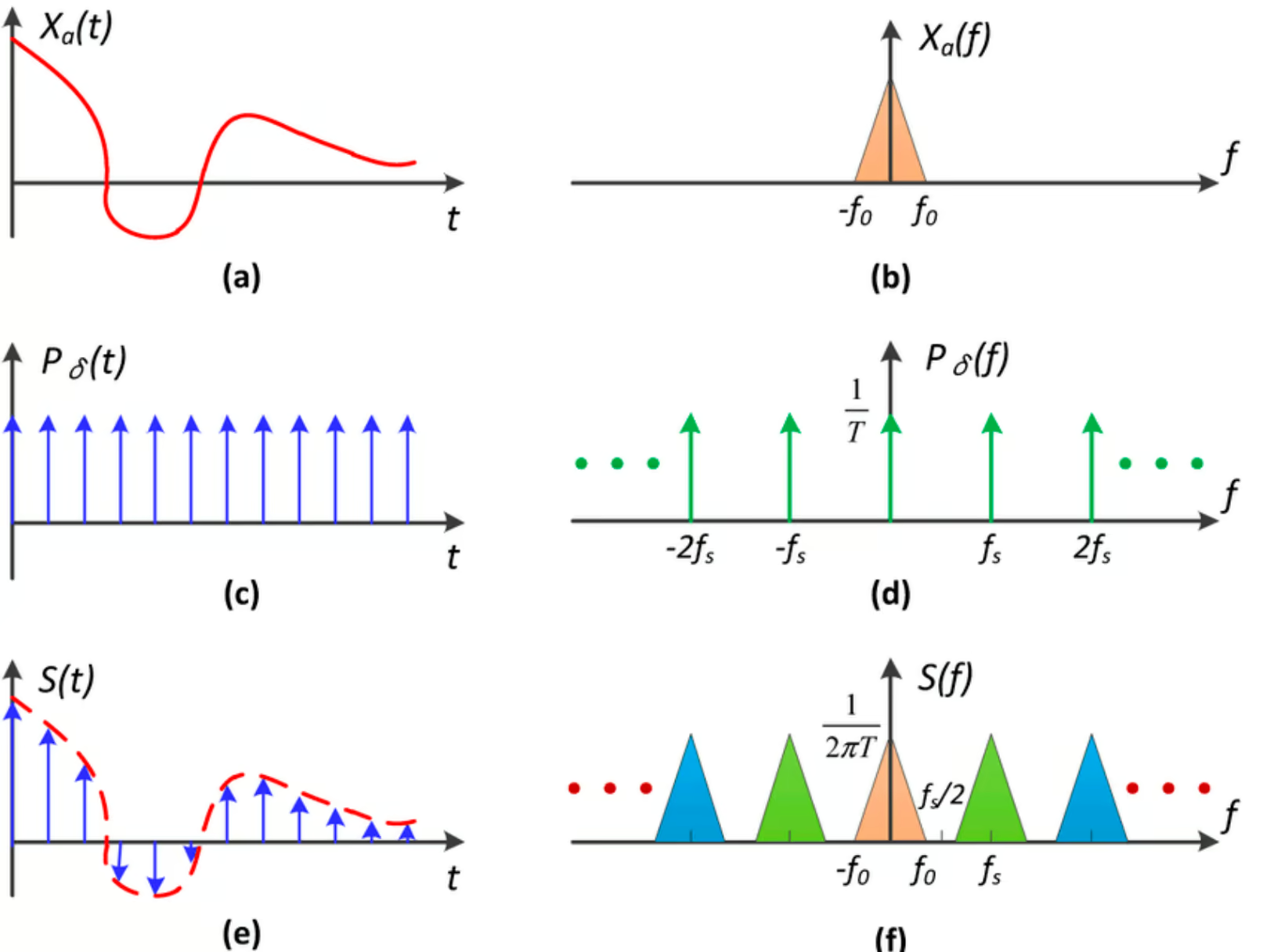
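A tiny 1D experiment shows the difference. This is a sketch: the helpers `point_sample` and `box_blur` are hypothetical names, and a 2-sample box average stands in for a general low-pass filter. The input alternates 1, 0, 1, 0, ..., so its true local average is 0.5.

```rust
/// Keep every `factor`-th sample (point sampling).
fn point_sample(signal: &[f64], factor: usize) -> Vec<f64> {
    signal.iter().step_by(factor).copied().collect()
}

/// Box filter: replace each sample with the mean of a window of `width`
/// samples starting at it (the window is clamped at the end of the signal).
fn box_blur(signal: &[f64], width: usize) -> Vec<f64> {
    (0..signal.len())
        .map(|i| {
            let end = (i + width).min(signal.len());
            signal[i..end].iter().sum::<f64>() / (end - i) as f64
        })
        .collect()
}

fn main() {
    // Highest frequency the fine grid can hold: 1, 0, 1, 0, ...
    let signal: Vec<f64> = (0..16).map(|i| if i % 2 == 0 { 1.0 } else { 0.0 }).collect();

    // Sample first, then blur: every kept sample is 1.0 (aliased to a
    // constant), and blurring a constant cannot bring back the 0.5 average.
    let sample_then_blur = box_blur(&point_sample(&signal, 2), 2);

    // Blur (low-pass) first, then sample: every output is the local
    // average 0.5, the correct low-frequency content.
    let blur_then_sample = point_sample(&box_blur(&signal, 2), 2);

    println!("sample then blur: {:?}", sample_then_blur);
    println!("blur then sample: {:?}", blur_then_sample);
}
```

Once sampling has thrown the high frequency away (or folded it into a false constant), no later blur can tell the alias apart from real content.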

Fourier Transform

The Fourier transform converts a function from the spatial domain to the frequency domain, and the inverse transform converts it back:

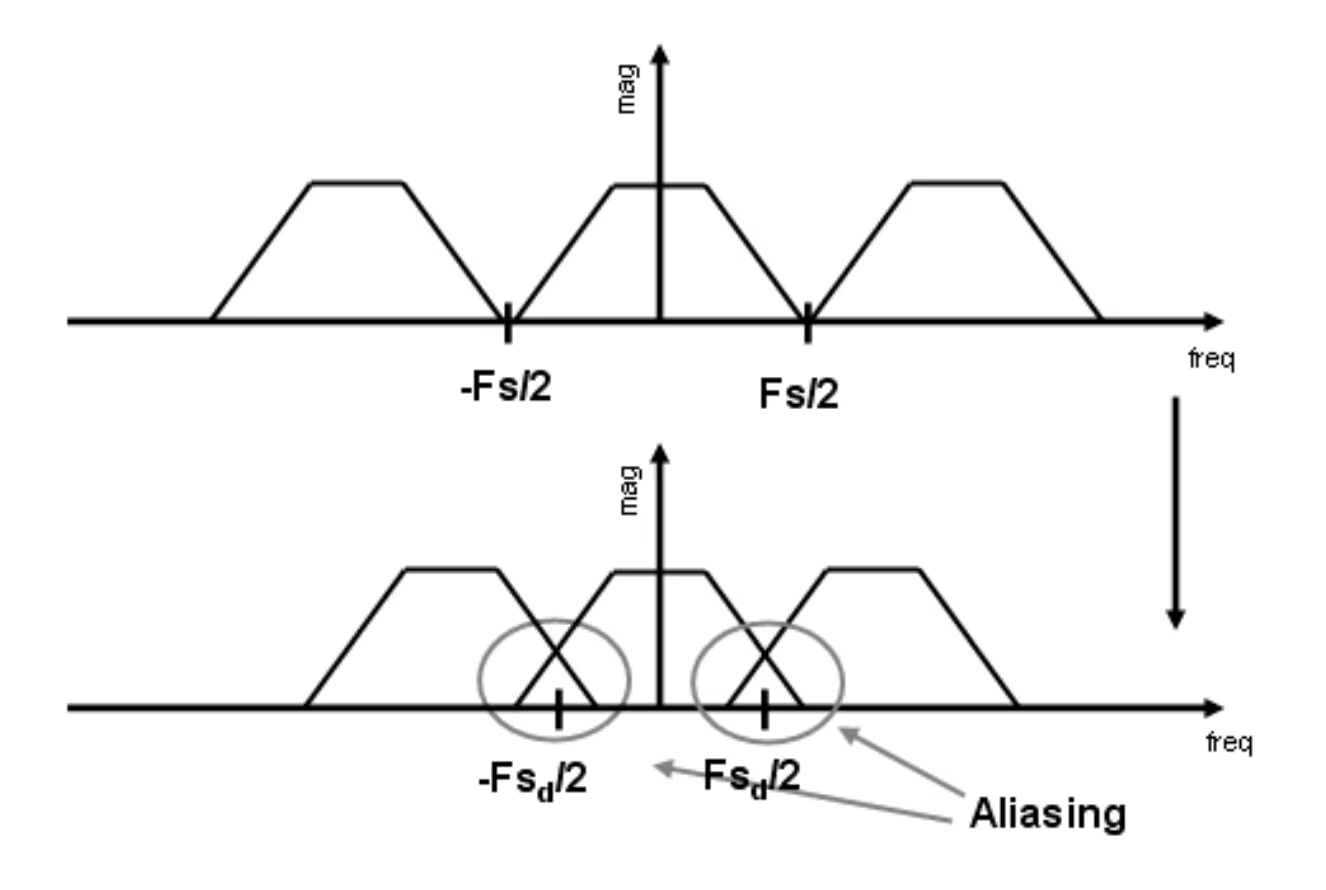
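For reference, one common convention (frequency ω measured in cycles per unit length) writes the transform pair as below; other texts place the factors of 2π differently.

$$
F(\omega) = \int_{-\infty}^{\infty} f(x)\, e^{-2\pi i \omega x}\, dx,
\qquad
f(x) = \int_{-\infty}^{\infty} F(\omega)\, e^{2\pi i \omega x}\, d\omega
$$

For example, under this convention f(x) = cos(2πax) transforms to ½[δ(ω − a) + δ(ω + a)]: a single frequency becomes a pair of spikes at ±a.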

Sampling at a low frequency loses the information carried by the high-frequency parts of a signal.

Filtering can be done either by convolving the signal with a filter kernel in the spatial domain or, equivalently, by multiplying their Fourier transforms in the frequency domain (the convolution theorem).
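The equivalence can be checked numerically. The sketch below is an illustration, not a production filter: it uses a naive O(N²) DFT instead of an FFT, stores complex numbers as `(re, im)` tuples to avoid external crates, and uses circular convolution (treating the signal as periodic), which is the setting in which the discrete convolution theorem holds exactly. The signal values and the 3-tap box kernel are arbitrary.

```rust
use std::f64::consts::PI;

/// Complex number stored as (real, imaginary).
type C = (f64, f64);

/// Complex multiplication.
fn mul(a: C, b: C) -> C {
    (a.0 * b.0 - a.1 * b.1, a.0 * b.1 + a.1 * b.0)
}

/// Naive O(N^2) discrete Fourier transform of a real-valued signal.
fn dft(x: &[f64]) -> Vec<C> {
    let n = x.len();
    (0..n)
        .map(|k| {
            x.iter().enumerate().fold((0.0, 0.0), |acc: C, (t, &v)| {
                let angle = -2.0 * PI * (k * t) as f64 / n as f64;
                (acc.0 + v * angle.cos(), acc.1 + v * angle.sin())
            })
        })
        .collect()
}

/// Circular convolution: indices wrap around, as if the signal were periodic.
fn circular_convolve(f: &[f64], g: &[f64]) -> Vec<f64> {
    let n = f.len();
    (0..n)
        .map(|i| (0..n).map(|j| f[j] * g[(i + n - j) % n]).sum::<f64>())
        .collect()
}

fn main() {
    let signal = [0.0, 1.0, 4.0, 2.0, 0.0, 3.0, 1.0, 0.0];
    // 3-tap box (blur) kernel centred at index 0, wrapping to index 7.
    let kernel = [1.0 / 3.0, 1.0 / 3.0, 0.0, 0.0, 0.0, 0.0, 0.0, 1.0 / 3.0];

    // Spatial domain: convolve, then transform.
    let lhs = dft(&circular_convolve(&signal, &kernel));
    // Frequency domain: transform each, then multiply pointwise.
    let rhs: Vec<C> = dft(&signal)
        .into_iter()
        .zip(dft(&kernel))
        .map(|(a, b)| mul(a, b))
        .collect();

    let max_err = lhs
        .iter()
        .zip(&rhs)
        .map(|(a, b)| (a.0 - b.0).abs().max((a.1 - b.1).abs()))
        .fold(0.0, f64::max);
    println!("max |DFT(f*g) - DFT(f)DFT(g)| = {:e}", max_err);
}
```

For large images the frequency-domain route pays off only with an FFT; the naive DFT here is just for readability.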

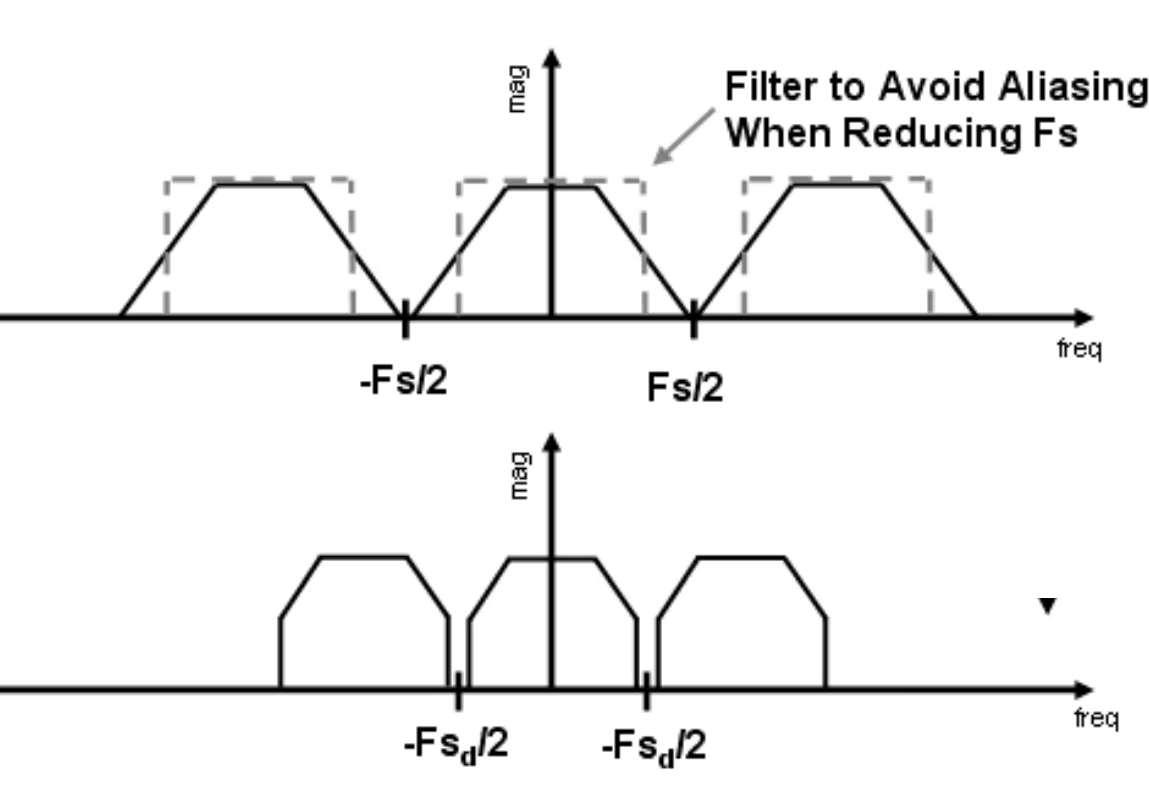

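Sampling is equivalent to multiplying the signal by a periodic impulse train (a Dirac comb) in the spatial domain. In the frequency domain this becomes a convolution with another impulse train, so the original spectrum is replicated at intervals equal to the sampling frequency.

Therefore, if the sampling frequency is too low, the replicas are packed closely enough to overlap, and the overlapping high frequencies appear as false low frequencies: this is aliasing. A low-pass filter applied before sampling removes those high frequencies, so the replicas no longer overlap. Blurring after sampling cannot help, because once the replicas overlap the aliased content can no longer be separated from the true signal.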
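A standard way to write this, with sampling interval T (sampling frequency 1/T) and the convention F(ω) = ∫ f(x) e^{-2πiωx} dx:

$$
\mathrm{III}_T(x) = \sum_{n=-\infty}^{\infty} \delta(x - nT)
\quad\longleftrightarrow\quad
\frac{1}{T}\sum_{k=-\infty}^{\infty} \delta\!\left(\omega - \frac{k}{T}\right)
$$

$$
\mathcal{F}\{f \cdot \mathrm{III}_T\}(\omega) = \frac{1}{T}\sum_{k=-\infty}^{\infty} F\!\left(\omega - \frac{k}{T}\right)
$$

The copies of F are spaced 1/T apart, so they stay separate only if F(ω) = 0 for |ω| ≥ 1/(2T), which is the Nyquist condition.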

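In practice, a common choice is a box filter one pixel wide: each pixel's value is the average of the signal over the pixel's area. This attenuates the frequencies the pixel grid cannot represent, although a box filter only approximates an ideal low-pass filter.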
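The approximation is visible in the box filter's transform. Measuring x in pixels and ω in cycles per pixel, under the same Fourier convention:

$$
\mathrm{rect}(x) =
\begin{cases}
1, & |x| < \tfrac{1}{2} \\
0, & \text{otherwise}
\end{cases}
\quad\longleftrightarrow\quad
\mathrm{sinc}(\omega) = \frac{\sin(\pi \omega)}{\pi \omega}
$$

At the Nyquist frequency ω = 0.5 the response is still 2/π ≈ 0.64, the first zero is at ω = 1, and side lobes remain beyond it, so some high-frequency content leaks through instead of being cut off sharply.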

Multisample Antialiasing (MSAA)

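Antialiasing by supersampling takes several samples inside each pixel (for example a 2×2 grid), tests whether each sample is covered, and averages the results. The average, which amounts to a box filter over the pixel area, is used as the pixel's color.

However, this is costly: with N samples per pixel the work grows roughly N times (4× for a 2×2 grid). Strictly speaking, shading every sample is supersampling (SSAA); MSAA proper reduces the cost by testing coverage and depth at every sample while shading only once per pixel.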
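The coverage part of supersampling can be sketched as follows. This is a minimal illustration under stated assumptions: the point type `P` and the functions `edge`, `inside`, and `coverage` are hypothetical, samples sit at sub-pixel centres on an n×n grid, and shading is omitted (a flat-colored triangle would contribute coverage × color).

```rust
/// A 2D point in screen space, in pixel units.
#[derive(Clone, Copy)]
struct P {
    x: f64,
    y: f64,
}

/// Edge function: signed (doubled) area of triangle a, b, p.
fn edge(a: P, b: P, p: P) -> f64 {
    (b.x - a.x) * (p.y - a.y) - (b.y - a.y) * (p.x - a.x)
}

/// Point-in-triangle test that accepts either winding order.
fn inside(tri: &[P; 3], p: P) -> bool {
    let e0 = edge(tri[0], tri[1], p);
    let e1 = edge(tri[1], tri[2], p);
    let e2 = edge(tri[2], tri[0], p);
    (e0 >= 0.0 && e1 >= 0.0 && e2 >= 0.0) || (e0 <= 0.0 && e1 <= 0.0 && e2 <= 0.0)
}

/// Fraction of pixel (px, py) covered by the triangle, estimated with an
/// n x n grid of samples. Averaging the samples is a box filter over the pixel.
fn coverage(tri: &[P; 3], px: usize, py: usize, n: usize) -> f64 {
    let mut hits = 0;
    for i in 0..n {
        for j in 0..n {
            let p = P {
                x: px as f64 + (i as f64 + 0.5) / n as f64,
                y: py as f64 + (j as f64 + 0.5) / n as f64,
            };
            if inside(tri, p) {
                hits += 1;
            }
        }
    }
    hits as f64 / (n * n) as f64
}

fn main() {
    let tri = [P { x: 0.0, y: 0.0 }, P { x: 4.0, y: 0.0 }, P { x: 0.0, y: 4.0 }];
    // Coverage of a 5x5 block of pixels using a 2x2 sample grid per pixel.
    for py in 0..5 {
        let row: Vec<String> = (0..5)
            .map(|px| format!("{:.2}", coverage(&tri, px, py, 2)))
            .collect();
        println!("{}", row.join(" "));
    }
}
```

Interior pixels come out as 1.00, pixels outside as 0.00, and pixels along the hypotenuse take fractional values, which is what smooths the jaggies.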

Other sampling strategies also exist, such as sharing samples among neighboring pixels or reusing samples across frames (the temporal domain).

Similar ideas are used for super-resolution, including approaches based on deep learning.

Z-Buffering

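Painter's Algorithm: paint primitives from back to front, overwriting the framebuffer. It requires sorting the n primitives by depth, which takes O(n log n) time, and it cannot resolve primitives that overlap cyclically (A in front of B, B in front of C, C in front of A).

Z-buffering is a per-pixel painter's algorithm: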
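A toy version of the painter's algorithm, sketched under simplifying assumptions: the `Span` type and `paint` function are hypothetical, each "primitive" is a 1D run of pixels with one color and one constant depth, and larger depth means farther away. Because every span has a single depth, this model cannot exhibit cyclic overlap.

```rust
/// A hypothetical 1D primitive: pixels start..end with one color and depth.
struct Span {
    start: usize,
    end: usize, // exclusive
    depth: f32, // larger = farther from the camera
    color: char,
}

/// Painter's algorithm: sort far-to-near, then overwrite the framebuffer.
fn paint(mut spans: Vec<Span>, width: usize) -> String {
    // The O(n log n) step. `total_cmp` gives a total order on f32.
    spans.sort_by(|a, b| b.depth.total_cmp(&a.depth));
    let mut frame = vec!['.'; width];
    for s in &spans {
        for x in s.start..s.end.min(width) {
            frame[x] = s.color; // nearer spans are painted later and win
        }
    }
    frame.into_iter().collect()
}

fn main() {
    // Listed near-first on purpose: the sort fixes the drawing order.
    let spans = vec![
        Span { start: 0, end: 6, depth: 1.0, color: 'A' },
        Span { start: 3, end: 10, depth: 5.0, color: 'B' },
    ];
    println!("{}", paint(spans, 10)); // prints AAAAAABBBB
}
```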

- Store the current minimum z-value for each pixel.

- Use an additional buffer for the depth values:

- Frame buffer: stores the color value of each pixel.

- Depth buffer (z-buffer): stores the depth value of each pixel.

Depth is taken as the absolute value of z, so it is always positive: a smaller depth is closer and a larger depth is farther.

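```rust
// Draw one primitive into the frame buffer with a depth test.
// Simplification: the primitive has one color and a constant depth and is
// treated as covering every pixel; a real rasterizer tests coverage and
// interpolates depth per pixel.
fn render(&self, frame_buffer: &mut FrameBuffer) {
    for x in 0..frame_buffer.width {
        for y in 0..frame_buffer.height {
            let pixel = &mut frame_buffer.pixels[x][y];
            // Keep this fragment only if it is closer than the stored one.
            if self.depth < pixel.depth {
                pixel.color = self.color;
                pixel.depth = self.depth;
            }
        }
    }
}
```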

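Initialize every entry of the depth buffer to f32::INFINITY. Then, during rendering, if a fragment is closer than the depth already stored for its pixel (that is, it is not occluded), update that pixel's color and depth.

In this way, we only keep the minimum z-value per pixel. The complexity is O(n) for n triangles (assuming each covers a bounded number of pixels), and the result does not depend on the order in which primitives are drawn, apart from exact depth ties.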
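To see the pieces together, here is a minimal sketch of a z-buffer rasterizer. The types `Vertex`, `Triangle`, and `Buffers` and the helper `vtx` are made up for illustration; each pixel is sampled once at its centre, and depth is interpolated linearly in screen space with barycentric weights (no perspective correction).

```rust
/// Screen-space vertex: pixel coordinates plus positive depth (smaller = closer).
#[derive(Clone, Copy)]
struct Vertex { x: f32, y: f32, z: f32 }

struct Triangle { v: [Vertex; 3], color: u32 }

/// Frame buffer (color) and depth buffer side by side, row-major.
struct Buffers { width: usize, height: usize, color: Vec<u32>, depth: Vec<f32> }

fn vtx(x: f32, y: f32, z: f32) -> Vertex { Vertex { x, y, z } }

/// Signed (doubled) area of triangle a, b, (px, py).
fn edge(a: Vertex, b: Vertex, px: f32, py: f32) -> f32 {
    (b.x - a.x) * (py - a.y) - (b.y - a.y) * (px - a.x)
}

impl Buffers {
    fn new(width: usize, height: usize) -> Self {
        Buffers {
            width,
            height,
            color: vec![0; width * height],
            depth: vec![f32::INFINITY; width * height], // nothing drawn yet
        }
    }

    fn draw(&mut self, tri: &Triangle) {
        let [a, b, c] = tri.v;
        let area = edge(a, b, c.x, c.y);
        if area == 0.0 { return; } // degenerate triangle
        for y in 0..self.height {
            for x in 0..self.width {
                let (px, py) = (x as f32 + 0.5, y as f32 + 0.5); // pixel centre
                // Barycentric weights; all non-negative iff the centre is inside.
                let w0 = edge(b, c, px, py) / area;
                let w1 = edge(c, a, px, py) / area;
                let w2 = edge(a, b, px, py) / area;
                if w0 < 0.0 || w1 < 0.0 || w2 < 0.0 { continue; }
                let z = w0 * a.z + w1 * b.z + w2 * c.z;
                let i = y * self.width + x;
                if z < self.depth[i] { // depth test: keep the closer fragment
                    self.depth[i] = z;
                    self.color[i] = tri.color;
                }
            }
        }
    }
}

fn main() {
    let far = Triangle { v: [vtx(-10.0, -10.0, 5.0), vtx(30.0, -10.0, 5.0), vtx(-10.0, 30.0, 5.0)], color: 2 };
    let near = Triangle { v: [vtx(0.0, 0.0, 1.0), vtx(2.2, 0.0, 1.0), vtx(0.0, 2.2, 1.0)], color: 1 };
    let mut fb = Buffers::new(4, 4);
    fb.draw(&near);
    fb.draw(&far); // drawn later but farther, so it only fills the uncovered pixels
    for row in fb.color.chunks(fb.width) {
        println!("{:?}", row);
    }
}
```

This prints `[1, 1, 2, 2]`, `[1, 2, 2, 2]`, then two rows of `[2, 2, 2, 2]`; swapping the two `draw` calls gives the same image, which is the order independence described above.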