Goal

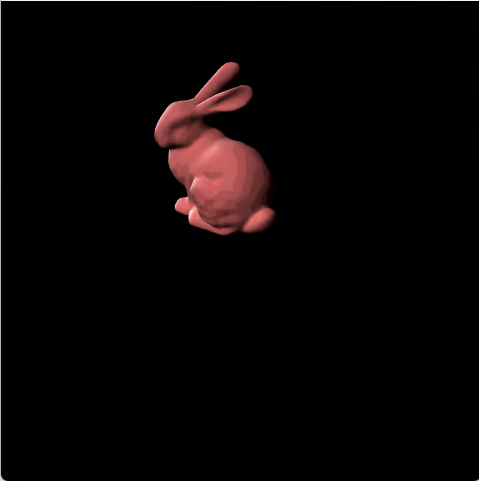

Now, we shall load a group of triangles to form an object. We shall then implement a simple Blinn-Phong reflection model to shade the object.

Texture

First, we need to load the object from a file. In our project, we are limited to using only vertices and faces to define the object. We will start by creating a new crate called Model. Within this crate, we will load the model file and the texture image to initialize faces and texture.

pub struct Model {

pub faces: Vec<Triangle>,

pub texture: Texture,

}

for line in reader.lines() {

let line = line.unwrap();

let tokens: Vec<&str> = line.split_whitespace().collect();

match tokens.first() {

Some(&"v") => {

let x: f32 = tokens[1].parse().unwrap();

let y: f32 = tokens[2].parse().unwrap();

let z: f32 = tokens[3].parse().unwrap();

vertices.push(Vec4::new(x, y, z, 1.0));

}

Some(&"f") => {

let idxs: Vec<usize> = tokens[1..4]

.iter()

.map(|&i| i.parse::<usize>().unwrap() - 1)

.collect();

let triangle_vertices: Vec<Vec4> = idxs.iter().map(|&i| vertices[i]).collect();

let a: Vec3 = triangle_vertices[0].xyz();

let b: Vec3 = triangle_vertices[1].xyz();

let c: Vec3 = triangle_vertices[2].xyz();

// Compute the normal of the triangle using the cross product

let ab: Vec3 = b - a;

let ac: Vec3 = c - a;

let norm: Vec3 = normalize(&cross(&ab, &ac));

let norm: Vec4 = Vec4::new(norm.x,norm.y,norm.z,0f32);

// Store the normal for each vertex of the triangle

for &idx in &idxs {

vertex_normals

.entry(idx)

.or_insert_with(Vec::new)

.push(norm);

}

triangles.push(Triangle {

vertices: triangle_vertices,

normals: vec![norm; 3], // Temporary: will replace with averaged normals

colors: vec![[0, 0, 0, 255],[0, 0, 0, 255],[0, 0, 0, 255]],

});

}

_ => continue, // Ignore other lines

}

}

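Here, we parse the object file line by line: lines starting with "v" define vertices and lines starting with "f" define faces. We first store the vertices in a vector. Face indices in the file are 1-based, so we subtract 1 before indexing into that vector. For each face, we calculate the normal vector using the cross product of two of its edges. After all faces are loaded, we average the normals of the faces that share a vertex to obtain the normal vector for that vertex.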
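The averaging step itself is not shown in the loop. A minimal sketch of it, assuming a map like `vertex_normals` from vertex index to the face normals collected for that vertex; the function name `average_normals` is hypothetical, and plain `[f32; 3]` arrays stand in for the project's vector types so the snippet compiles on its own:

```rust
use std::collections::HashMap;

/// Averages the face normals collected for each vertex index and
/// renormalizes the result. Plain arrays stand in for the project's Vec4.
fn average_normals(
    vertex_normals: &HashMap<usize, Vec<[f32; 3]>>,
) -> HashMap<usize, [f32; 3]> {
    let mut averaged = HashMap::new();
    for (&idx, normals) in vertex_normals {
        // Sum all face normals that touch this vertex.
        let mut sum = [0.0f32; 3];
        for n in normals {
            for k in 0..3 {
                sum[k] += n[k];
            }
        }
        // Normalizing the sum gives the same direction as normalizing the mean.
        let len = (sum[0] * sum[0] + sum[1] * sum[1] + sum[2] * sum[2]).sqrt();
        if len > 0.0 {
            averaged.insert(idx, [sum[0] / len, sum[1] / len, sum[2] / len]);
        }
    }
    averaged
}
```

The averaged normals would then replace the temporary per-face normals stored in each triangle.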

For simplicity, we use a solid color for the texture. In the texture struct, we can define the following parameters:

- UV image information

- Base color (derived from the UV image)

- Ambient coefficient: Typically, this is determined by the smoothness of the texture material.

- Specular coefficient: This also depends on the material's smoothness.

However, this texture is only used for the Blinn-Phong model, so it is significantly simplified.
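A simplified texture along these lines might look as follows. This is a sketch rather than the project's actual definition: the field names `base_color`, `ambient`, and `specular` follow the ones used in get_color, while the `solid` constructor and the `[f32; 3]` representation are assumptions made to keep the snippet self-contained.

```rust
/// Simplified material for Blinn-Phong shading. All coefficients are
/// RGB triples in [0, 1]; arrays stand in for the project's Vec3.
#[derive(Debug, Clone, PartialEq)]
pub struct Texture {
    pub base_color: [f32; 3], // diffuse color (would come from the UV image)
    pub ambient: [f32; 3],    // ambient coefficient
    pub specular: [f32; 3],   // specular coefficient
}

impl Texture {
    /// Hypothetical helper: a solid-colored texture with uniform
    /// ambient and specular coefficients, each clamped to [0, 1].
    pub fn solid(base_color: [f32; 3], ambient: f32, specular: f32) -> Self {
        let a = ambient.clamp(0.0, 1.0);
        let s = specular.clamp(0.0, 1.0);
        Texture {
            base_color: base_color.map(|c| c.clamp(0.0, 1.0)),
            ambient: [a; 3],
            specular: [s; 3],
        }
    }
}
```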

In the texture struct, we will implement our get_color function.

pub fn get_color(&self, light: &Light, spot: &Vec4, normal: &Vec4, camera: &Vec4) -> [u8; 4] {

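// direction vectors, pointing away from the shaded point

let light_vec: Vec4 = light.loc - spot;

let view_vec: Vec4 = camera - spot;

// squared distance to the light, used for inverse-square falloff

let distance =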

light_vec.x * light_vec.x + light_vec.y * light_vec.y + light_vec.z * light_vec.z;

let light_vec: Vec4 = light_vec.xyz().normalize().insert_row(3, 0f32);

let view_vec: Vec4 = view_vec.xyz().normalize().insert_row(3, 0f32);

let half_vec: Vec4 = view_vec + light_vec;

let half_vec: Vec4 = half_vec.xyz().normalize().insert_row(3, 0f32);

let nh = normal.dot(&half_vec);

let nl = normal.dot(&light_vec);

let intensity: Vec3 = light.intensity / distance;

// specular light

let specular_light: Vec3 = intensity * {

if nh > 0f32 {

nh.powf(4f32)

} else {

0f32

}

};

// diffuse reflection light

let reflection_light: Vec3 = intensity * {

if nl > 0f32 {

nl

} else {

0f32

}

};

// color

let ambient_color: Vec3 = light.ambient.component_mul(&self.ambient);

let reflection_color: Vec3 = reflection_light.component_mul(&self.base_color);

let specular_color: Vec3 = specular_light.component_mul(&self.specular);

let combined_color = reflection_color + ambient_color + specular_color;

return [

(combined_color.x * 255f32).clamp(0f32, 255f32) as u8,

(combined_color.y * 255f32).clamp(0f32, 255f32) as u8,

(combined_color.z * 255f32).clamp(0f32, 255f32) as u8,

255u8,

];

}

This function takes a light source (with its location, intensity, and ambient light), the point being shaded (spot), the normal vector at that point, and the camera position.

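We then apply the following equation:

$$C = k_a \odot I_a + k_d \odot \frac{I}{r^2}\,\max(0,\ \mathbf{n}\cdot\mathbf{l}) + k_s \odot \frac{I}{r^2}\,\max(0,\ \mathbf{n}\cdot\mathbf{h})^{p}$$

Here $I_a$ is the ambient light, $I$ is the light intensity, $r$ is the distance to the light, $\mathbf{n}$ is the unit normal, $\mathbf{l}$ is the unit light direction, $\mathbf{h}$ is the normalized half vector between the light and view directions, and $p = 4$ in the code above. Our coefficients $k_a$ (ambient), $k_d$ (base_color), and $k_s$ (specular) are normalized. Therefore, if the light intensity is too high, the result is clamped at 255 and the object may lose its base_color and become overly bright.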
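To make the ambient, diffuse, and specular terms concrete, here is a minimal single-channel sketch that does not depend on any vector library. It uses the conventional Blinn-Phong definition, in which the light and view vectors point from the shaded point toward the light and the camera. The function name `blinn_phong`, its parameter names, and the `[f32; 3]` helpers are all assumptions for illustration, not the project's API.

```rust
type V3 = [f32; 3];

fn sub(a: V3, b: V3) -> V3 { [a[0] - b[0], a[1] - b[1], a[2] - b[2]] }
fn add(a: V3, b: V3) -> V3 { [a[0] + b[0], a[1] + b[1], a[2] + b[2]] }
fn dot(a: V3, b: V3) -> f32 { a[0] * b[0] + a[1] * b[1] + a[2] * b[2] }
fn normalize(a: V3) -> V3 {
    let len = dot(a, a).sqrt();
    [a[0] / len, a[1] / len, a[2] / len]
}

/// Single-channel Blinn-Phong: ambient + diffuse + specular.
/// `normal` is assumed to be a unit vector.
fn blinn_phong(
    point: V3, normal: V3, light_pos: V3, camera: V3,
    intensity: f32, ambient_light: f32,
    k_a: f32, k_d: f32, k_s: f32, shininess: f32,
) -> f32 {
    let to_light = sub(light_pos, point);
    let r2 = dot(to_light, to_light); // squared distance to the light
    let l = normalize(to_light);      // surface -> light
    let v = normalize(sub(camera, point)); // surface -> camera
    let h = normalize(add(l, v));     // half vector
    let falloff = intensity / r2;     // inverse-square attenuation
    let diffuse = k_d * falloff * dot(normal, l).max(0.0);
    let specular = k_s * falloff * dot(normal, h).max(0.0).powf(shininess);
    k_a * ambient_light + diffuse + specular
}
```

With a light of intensity 4 placed 2 units straight above the surface, the falloff is 1, so the result is simply the sum of the three weighted coefficients.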

Pipeline

Now, we have everything we need. The remaining task is to modify our pipeline to incorporate the get_color function into the rasterizer we created earlier.

Here, we can employ two different methods: vertex shader and fragment shader.

Vertex Shader

In the vertex shader, we can shade our object as soon as we apply the model transformation and view transformation.

for index in 0..3 {

let vertex = &self.vertices[index];

let vertex_mv = mv_matrix * vertex;

let vertex_proj = project * vertex_mv;

let vertex_view = viewport * vertex_proj;

let transformed_vertex = vertex_view / vertex_view.w;

let normal = normal_matrix * self.normals[index];

let transformed_normal = normal.xyz().normalize().insert_row(3, 0f32);

let mut color_f32 = [0f32, 0f32, 0f32, 255f32];

for light in lights {

let color = texture.get_color(light, &vertex_mv, &transformed_normal, camera);

for i in 0..3 {

color_f32[i] += color[i] as f32;

}

}

transformed_triangle.colors[index] = [

color_f32[0].clamp(0f32, 255f32).round() as u8,

color_f32[1].clamp(0f32, 255f32).round() as u8,

color_f32[2].clamp(0f32, 255f32).round() as u8,

255u8

];

transformed_triangle.vertices[index] = transformed_vertex;

transformed_triangle.normals[index] = transformed_normal;

}

Here, during the MVP (Model-View-Projection) and viewport transformations, we can calculate the color for each vertex.
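With colors stored per vertex, the rasterizer still needs a color for every pixel inside the triangle. A common approach (Gouraud shading) is to blend the three vertex colors with the pixel's barycentric weights. The sketch below assumes that approach; the text does not show how the rasterizer consumes `colors`, and the function name `interpolate_color` is hypothetical.

```rust
/// Interpolates three RGBA vertex colors with barycentric weights (u, v, w),
/// which are assumed to sum to 1.
fn interpolate_color(colors: &[[u8; 4]; 3], u: f32, v: f32, w: f32) -> [u8; 4] {
    let weights = [u, v, w];
    let mut out = [0u8; 4];
    for channel in 0..4 {
        // Weighted sum of this channel over the three vertices.
        let mut acc = 0.0f32;
        for i in 0..3 {
            acc += weights[i] * colors[i][channel] as f32;
        }
        out[channel] = acc.clamp(0.0, 255.0).round() as u8;
    }
    out
}
```

Because lighting is evaluated only at the three vertices, this is cheaper than per-pixel shading but can miss highlights that fall in the middle of a large triangle.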

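It's important to note that the normal vector should be transformed by the inverse transpose of the model-view matrix, not by the model-view matrix itself.

The rationale is that a normal is defined by being perpendicular to the surface's tangent vectors. Tangent vectors transform with the model-view matrix and remain tangent to the transformed surface, but under non-uniform scaling or shear a normal transformed the same way would no longer be perpendicular to them. Transforming the normal by the inverse transpose preserves this perpendicularity.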
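The inverse transpose can be derived in a few lines. In this sketch, $M$ denotes the upper-left 3x3 block of the model-view matrix, $\mathbf{t}$ is any tangent vector, $\mathbf{n}$ is the normal, and $G$ is the unknown matrix applied to normals; these symbols are introduced here for illustration.

```latex
\begin{aligned}
\mathbf{t}' &= M\,\mathbf{t}, \qquad \mathbf{n}' = G\,\mathbf{n},\\
\mathbf{n}'^{\top}\mathbf{t}' &= \mathbf{n}^{\top} G^{\top} M\,\mathbf{t} = 0
\quad \text{whenever } \mathbf{n}^{\top}\mathbf{t} = 0,\\
G^{\top} M = I \;&\Longrightarrow\; G = \left(M^{-1}\right)^{\top}.
\end{aligned}
```

Choosing $G^{\top} M = I$ is sufficient to keep the transformed normal perpendicular to every transformed tangent. The result is generally not unit length, which is why the code normalizes the normal after applying the matrix.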

Fragment Shader

In the fragment shader, we compute the color for each pixel (or subpixel) individually, so the calculation starts from a position in viewport space.

if triangle.is_point_inside(&p) {

// inside triangle, then check depth

let (u, v, w) = triangle.barycentric_coordinates(&p);

let p_p:Vec4 = u * triangle.vertices[0] + v * triangle.vertices[1] + w * triangle.vertices[2];

let p_view:Vec4 = inv_projection * inv_viewport *p_p;

let p_view:Vec4 = p_view / p_view.w;

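let normal:Vec4= u * triangle.normals[0] + v * triangle.normals[1] + w * triangle.normals[2];

// renormalize: interpolating unit normals does not give a unit vector

let normal:Vec4 = normal.xyz().normalize().insert_row(3, 0f32);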

let z = triangle.interp_depth(u, v, w);

if z > samples_z[sample_index] {

// z-test for subpixel

sample_colors[sample_index] = {

let mut color_f32 = [0f32, 0f32, 0f32, 255f32];

for light in &self.lights {

let color = model.texture.get_color(light, &p_view, &normal, &self.camera);

color_f32[0] += color[0] as f32;

color_f32[1] += color[1] as f32;

color_f32[2] += color[2] as f32;

}

[(color_f32[0]).clamp(0f32, 255f32).round() as u8,

(color_f32[1]).clamp(0f32, 255f32).round() as u8,

(color_f32[2]).clamp(0f32, 255f32).round() as u8,

255u8].to_vec()

};

// update depth

samples_z[sample_index] = z;

}

};

With supersampling, each sample (subpixel) in screen space is tested to determine whether it lies within the triangle. If it does, barycentric coordinates are used to interpolate the sample's position in viewport space. The inverse viewport and inverse projection transformations are then applied to return to view space (where our normal vectors reside). Finally, if the sample passes the depth test, the get_color function is used to calculate the fragment color.
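The barycentric step can be sketched as follows. The implementation of `barycentric_coordinates` is not shown in this text, so this is one possible version based on signed areas, using 2D screen coordinates; the function name `barycentric` and the `Option` return type are assumptions.

```rust
/// Barycentric coordinates (u, v, w) of point p with respect to the 2D
/// triangle (a, b, c), so that p = u*a + v*b + w*c. Returns None for a
/// degenerate (zero-area) triangle.
fn barycentric(
    p: [f32; 2], a: [f32; 2], b: [f32; 2], c: [f32; 2],
) -> Option<(f32, f32, f32)> {
    // Twice the signed area of the whole triangle.
    let area = (b[0] - a[0]) * (c[1] - a[1]) - (c[0] - a[0]) * (b[1] - a[1]);
    if area.abs() < f32::EPSILON {
        return None;
    }
    // Each weight is the signed area of the sub-triangle opposite a vertex,
    // divided by the area of the whole triangle.
    let u = ((b[0] - p[0]) * (c[1] - p[1]) - (c[0] - p[0]) * (b[1] - p[1])) / area;
    let v = ((c[0] - p[0]) * (a[1] - p[1]) - (a[0] - p[0]) * (c[1] - p[1])) / area;
    let w = 1.0 - u - v;
    Some((u, v, w))
}
```

A sample lies inside the triangle when all three weights are non-negative, and the same weights can then be reused to interpolate positions, normals, and depth.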

Results