Table of Contents

- Scanner

- A: Stair, Peak, or Neither

- B: Upscaling

- C: Clock Conversion

- D: Product of Binary Decimals

- E: 0, 1, 2, Tree?

- G: Shuffling Songs

Scanner

Given that we're utilizing Rust, it's essential we adopt a type-safe scanner. While this scanner may operate slowly, it's a decision we're willing to accept.

fn main() {

let stdin = io::stdin();

let stdout = io::stdout();

let input = Scanner::from(stdin.lock());

let writer = io::BufWriter::new(stdout.lock());

solve(input, writer);

}

pub struct Scanner<B> {

reader: B,

buffer: Vec<String>,

}

impl<R: BufRead> Scanner<R> {

pub fn token<T: std::str::FromStr>(&mut self) -> T {

loop {

if let Some(token) = self.buffer.pop() {

return token.parse().ok().expect("Failed parse");

}

let mut input = String::new();

self.reader.read_line(&mut input).expect("Failed read");

self.buffer = input.split_whitespace().rev().map(String::from).collect();

}

}

}

impl<R: BufRead> From<R> for Scanner<R> {

fn from(reader: R) -> Self {

Self {

reader,

buffer: Vec::new(),

}

}

}

A: Stair, Peak, or Neither

This question is simply to test your template. Compare the three inputs and decide what to print.

fn solve<R: BufRead, W: Write>(mut input: Scanner<R>, mut w: W) {

let test_case_num: usize = input.token();

for _ in 0..test_case_num {

let (a, b, c):(u8,u8,u8) = (input.token(), input.token(), input.token());

if (a < b && b < c) {

writeln!(w, "STAIR");

} else if (a < b && b > c) {

writeln!(w, "PEAK");

} else {

writeln!(w, "NONE");

}

}

}

B: Upscaling

This question requires printing in a specific order, based on oddness as the criterion. However, it's important to properly handle newline characters (\n).

fn solve<R: BufRead, W: Write>(mut input: Scanner<R>, mut w: W) {

let c: usize = input.token();

for _ in 0..c {

let n: usize = input.token();

let s1: String = (0..n).map(|i| if i % 2 == 0 { "##" } else { ".." }).collect();

let s2: String = (0..n).map(|i| if i % 2 == 0 { ".." } else { "##" }).collect();

let mut ans = String::with_capacity(n * 4 * (n + 1));

for i in 0..n {

let pattern = if i % 2 == 0 { &s1 } else { &s2 };

ans.push_str(pattern);

ans.push('\n');

ans.push_str(pattern);

ans.push('\n');

}

ans.pop(); // remove last '\n'

writeln!(w,"{}",ans);

}

}

C: Clock Conversion

This task involves converting time from a 24-hour format to a 12-hour format. One critical detail to consider is that 00:00 should be converted to 12:00 AM.

fn solve<R: BufRead, W: Write>(mut input: Scanner<R>, mut w: W) {

let c: usize = input.token();

for _ in 0..c {

let t: String = input.token();

let hours_str = &t[0..2];

let mut hours: usize = hours_str.parse().unwrap();

let period = if hours >= 12 { " PM" } else { " AM" };

if hours > 12 {

hours -= 12;

} else if hours == 0 {

hours = 12; // midnight

}

writeln!(w,"{:02}{}{}",hours,&t[2..5],period);

}

}

D: Product of Binary Decimals

This problem begins to present some challenges. We need to determine if a number can be completely factorized by "binary decimals," which are decimals consisting solely of 0s or 1s.

Attempting to factorize each number could prove quite difficult, not to mention the need to verify if the factors are binary decimals.

Therefore, an alternative approach would be to pre-generate all binary decimals up to . Then, we can attempt to factorize the number using this set of binary decimals.

fn solve<R: BufRead, W: Write>(mut input: Scanner<R>, mut w: W) {

let c: usize = input.token();

// generate binary number list

let mut binary_decimals =Vec::new();

generate_binary_decimal(100000,1,&mut binary_decimals);

for _ in 0..c {

let n: u32 = input.token();

// test this number greedy

if decompose_with_binary_decimal(n, &binary_decimals,0) {

writeln!(w,"{}","YES");

}

else{

writeln!(w,"{}","NO");

}

}

}

fn generate_binary_decimal(max: u32, current: u32, results: &mut Vec<u32>) {

if current < max {

results.push(current);

generate_binary_decimal(max, current * 10, results); // add zero

generate_binary_decimal(max, current * 10 + 1, results); // add 1

}

}

fn decompose_with_binary_decimal(number: u32, binary_decimals: &[u32]) -> bool {

if number == 1 {

return true;

}

for &num in binary_decimals.iter() {

// greedy, skip large

if num > number || num == 1 {

continue; // Skip numbers larger than the current limit

}

if number % num == 0 {

let quotient = number / num;

// Try to decompose the quotient further

if decompose_with_binary_decimal(quotient, binary_decimals) {

return true; // Found a valid decomposition

}

}

}

false // Cannot be decomposed by the binary_decimals

}

The solution consists of two parts. The first part involves generating binary decimals. Starting from 1, we can recursively append a 0 or 1 at the end until we reach the maximum limit.

The second part involves decomposing the given number. We can exclude 1 and any binary decimals that are larger than 1/10th of the given number, since 1 is always a factor and the second smallest factor would be 10. After finding a quotient, we can recursively decompose the quotients.

E: 0, 1, 2, Tree?

This problem, though tagged "brute force" and "bitmasks," can indeed be solved in time complexity using a simple mathematical formula.

First, let's consider the functionality of the tree nodes. Node a generates branches for further leaves: it consumes one branch and produces two. Node b merely extends existing branches by consuming and producing one branch each. Node c, on the other hand, terminates a branch, consuming one without providing any, thereby making the branch unavailable for further extension. Therefore, to ensure a functioning tree, the balance between a and c is crucial.

For the tree to achieve minimum height, maximizing the number of branches initially is essential. Hence, the nodes should be placed in the order from a to c, starting with node a if possible. After the first a node, which consumes nothing but provides two branches, the number of c nodes should be exactly a-1 to ensure all nodes are properly utilized.

To calculate the minimum height of the tree with a given number of a nodes, we find the smallest such that . This corresponds to the binary width of . In Rust, for a a of type u32, this can be calculated using u32::BITS - a.leading_zeros().

The last layer may not be completely filled, necessitating the use of b or c nodes to fill the gaps. The number of nodes needed to fill the last layer is (1 << height) - 1 -a that is .

If there are insufficient b nodes to fill the last layer, c nodes are used, and the height remains unchanged. If there are remaining b nodes after filling the layer, these extra b nodes can increase the height by , where the floor function denotes rounding down to the nearest whole number.

fn solve<R: BufRead, W: Write>(mut input: Scanner<R>, mut w: W) {

let c: usize = input.token();

for _ in 0..c {

let (a, mut b, c): (u32, u32, u32) = (input.token(), input.token(), input.token());

if c != a + 1 {

writeln!(w, "-1");

} else {

// current layer is binary bits

let mut layer = u32::BITS - a.leading_zeros();

// there are unfilled layer : 2^n - 1 - a

// fill < a

// let fill = 1u32.rotate_left(layer) - 1 - a;

let fill = (1 << layer) - 1 - a;

b = b.saturating_sub(fill);

if b > 0 {

layer += ((b - 1) / c) + 1;

}

writeln!(w, "{}", layer);

}

}

}

G: Shuffling Songs

I suspect I misunderstood this question because my answer turned out to be the slowest... and the lengthiest.

Regardless, let's delve into my thought process for this problem.

In my approach, the task is to construct an undirected graph without loops and to analyse this graph to identify the longest path.

Fist Submission

For our initial attempt:

fn solve<R: BufRead, W: Write>(mut input: Scanner<R>, mut w: W) {

let c: usize = input.token();

for _ in 0..c {

let song_num:u32 = input.token();

let mut playlist = Playlist::new();

for _ in 0..song_num {

let genre:String = input.token();

let writer:String = input.token();

playlist.add(&genre, &writer)

}

writeln!(w,"{}", song_num - playlist.longest_path());

}

}

struct Song {

id: u32,

genre:u32,

writer:u32,

}

struct Playlist {

link: HashMap<u32,Vec<u32>>,

songs:Vec<Song>,

genres: HashMap<String,u32>,

writers: HashMap<String,u32>,

next_id: u32,

}

impl Playlist {

fn new() -> Self {

Playlist {

link: HashMap::new(),

songs: Vec::new(),

genres: HashMap::new(),

writers: HashMap::new(),

next_id:0

}

}

fn mapping(map: &mut HashMap<String, u32>, s: &str) -> u32 {

if !map.contains_key(s) {

let new_id = map.len() as u32;

map.insert(s.to_string(), new_id);

new_id

} else {

*map.get(s).unwrap()

}

}

fn add(&mut self,genre:&str,writer:&str){

let genre = Self::mapping(&mut self.genres, genre);

let writer = Self::mapping(&mut self.writers, writer);

let id = self.next_id;

self.next_id += 1;

let new_song = Song{id,genre,writer};

for song in &self.songs {

if song.genre == new_song.genre || song.writer == new_song.writer {

self.link.entry(song.id).or_insert_with(Vec::new).push(new_song.id);

self.link.entry(new_song.id).or_insert_with(Vec::new).push(song.id);

}

};

self.songs.push(new_song);

}

fn dfs(&self, song: u32, visited: &mut HashSet<u32>, length: &mut u32, max_length: &mut u32) {

visited.insert(song);

*length += 1;

if *length > *max_length {

*max_length = *length;

}

if let Some(neighbors) = self.link.get(&song) {

for &neighbor in neighbors {

if !visited.contains(&neighbor) {

self.dfs(neighbor, visited, length, max_length);

}

}

}

visited.remove(&song);

*length -= 1;

}

fn longest_path(&self) -> u32 {

let mut max_length = 0;

for song in &self.songs {

let mut visited = HashSet::new();

let mut length = 0;

self.dfs(song.id, &mut visited, &mut length, &mut max_length);

}

max_length

}

}

We label our graph Playlist. Upon encountering a new song, we employ a self-incrementing map to convert the String into a u32, which helps expedite comparisons. Next, we add the new song to a list, combing through it to find songs that can connect, thereby establishing a bidirectional link.

Once the mapping is complete, we can utilize DFS (Depth-First Search) to navigate through each link in pursuit of the longest path.

Regrettably, this straightforward approach did not suffice, as we encountered a timeout error on Test 9.

Second Submission

The root cause of our failure was the presence of numerous identical songs in the playlist, each linked to a multitude of possible paths, making it impractically time-consuming to explore every pathway and backtrack.

A straightforward solution to this dilemma is to consolidate identical songs. If two songs are the same, their links would be identical as well; thus, merging them causes no harm. Moreover, when tallying lengths, the amalgamated node makes a more significant contribution.

Here's our revised strategy:

struct Playlist {

link: HashMap<(u32, u32), Vec<(u32, u32)>>,

songs: HashMap<(u32, u32), u32>, // store weight

genres: HashMap<String, u32>,

writers: HashMap<String, u32>,

}

impl Playlist {

fn add(&mut self, genre: &str, writer: &str) {

let genre = Self::mapping(&mut self.genres, genre);

let writer = Self::mapping(&mut self.writers, writer);

let new_song = (genre, writer);

let is_new_song = !self.songs.contains_key(&new_song);

let weight = self.songs.entry(new_song).or_insert(0);

*weight += 1;

if is_new_song {

// if new song, init links

self.link.entry(new_song).or_insert_with(Vec::new);

for (&old_song, _) in self.songs.iter() {

if old_song != new_song && (old_song.0 == genre || old_song.1 == writer) {

// create two way links

self.link.get_mut(&new_song).unwrap().push(old_song);

self.link.get_mut(&old_song).unwrap().push(new_song);

}

}

}

}

fn dfs(

&self,

song: &(u32, u32),

visited: &mut HashSet<(u32, u32)>,

length: &mut u32,

max_length: &mut u32,

) {

visited.insert(*song);

let current_weight = self.songs.get(song).unwrap();

*length += current_weight;

*max_length = max(*max_length, *length);

if let Some(neighbors) = self.link.get(&song) {

for &neighbor in neighbors {

if !visited.contains(&neighbor) {

self.dfs(&neighbor, visited, length, max_length);

}

}

}

*length -= current_weight;

visited.remove(&song);

}

fn longest_path(&self) -> u32 {

let mut max_length = 0;

for song in self.link.keys() {

let mut visited = HashSet::new();

let mut length = 0;

self.dfs(song, &mut visited, &mut length, &mut max_length);

}

max_length

}

}

This time, we opted to eliminate the id field for songs, instead using (genre, writer) as the identifier. Additionally, within the songs list, we employ a HashMap to keep track of the weights of each song. When introducing a new song, it's compared with existing ones and merged if an identical song already exists. In DFS, when visiting a merged song, we augment the length with its weight.

Yet, we encountered an error in Test 9 again.

Third submission

Upon re-evaluating our logic, I realized that a merged node offers more than just additional length—it also increases visibility.

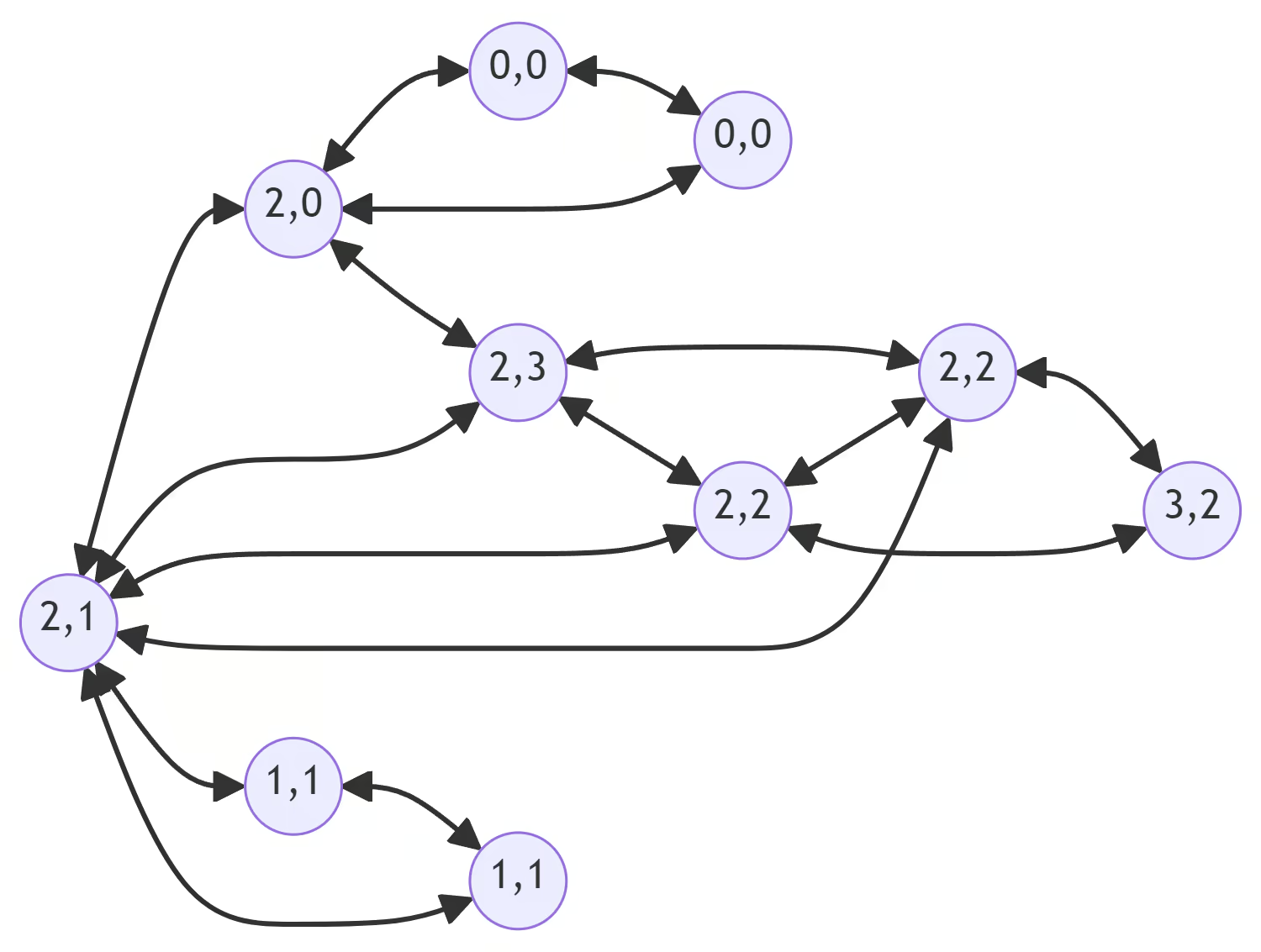

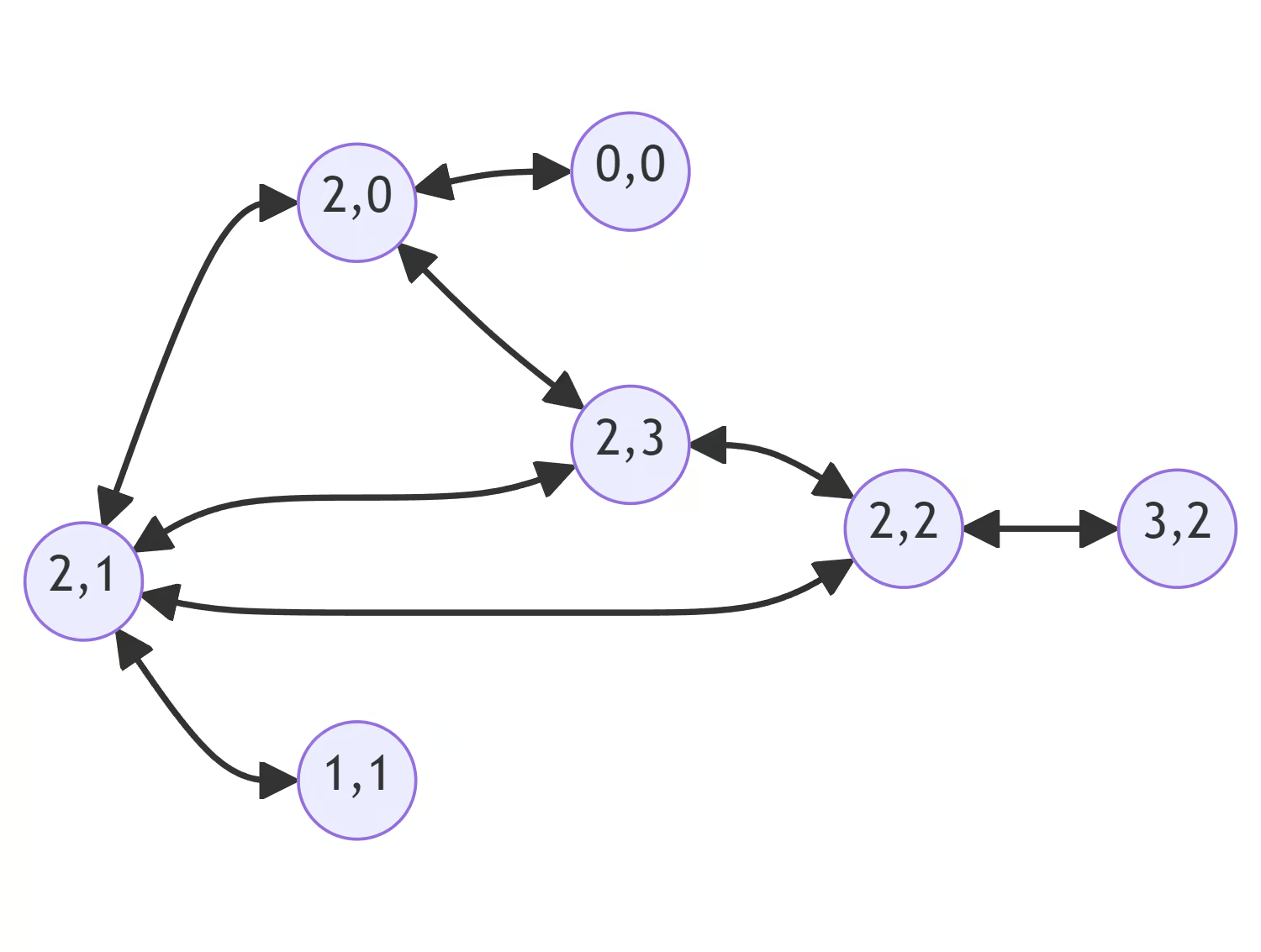

Consider two graphs: the original and one with merged same nodes. In the original graph, node (2,2) could move to (3,2) and then return via a different (2,2) node. However, after merging same nodes, once we move to (3,2), returning is not an option because (2,2) would be marked as visited.

Therefore, the solution becomes evident: we should treat the weight of each node as its accessibility. A merged node like (2,2) should be visitable twice.

fn dfs(

&self,

song: &(u32, u32),

visited: &mut HashMap<(u32, u32), u32>,

length: &mut u32,

max_length: &mut u32,

) {

let visit_count = visited.entry(*song).or_insert(0);

// if visited count enough, just pass

if *visit_count >= *self.songs.get(song).unwrap() {

return;

}

// if first time visit, add length

if *visit_count == 0 {

*length += self.songs.get(song).unwrap();

*max_length = std::cmp::max(*max_length, *length);

}

*visit_count += 1;

// visit neighbors

if let Some(neighbors) = self.link.get(&song) {

for &neighbor in neighbors {

self.dfs(&neighbor, visited, length, max_length);

}

}

// back

let visit_count = visited.entry(*song).or_insert(0);

*visit_count -= 1;

if *visit_count == 0 {

*length -= *self.songs.get(song).unwrap();

}

}

We slightly modified the visited list so that it's now a HashMap. This not only tracks whether a node has been visited but also how many times it has been visited. Only the first visit contributes weight to the length; subsequent visits simply pass through without action. If a node has been visited a number of times equal to its weight, it should no longer be passable.

This adjustment allowed us to overcome Test 9. However, we then faced a timeout error on Test 55.

Fourth Submission

This time, the test case revealed that all nodes ending with "d" were interconnected, resulting in an excessive number of links.

1

16

z z

a d

a y

b d

a x

c d

d d

e d

f d

g d

h d

i d

j d

k d

l d

m d

At that point, I recognized that my approach might have been misguided and somewhat naive. Yet, after investing several hours into it, I was determined to persist.

Given the plethora of identical nodes interconnected, it was crucial to devise a strategy for their identification and consolidation to streamline our graph.

We observed that two nodes are equivalent if they connect to the exact same nodes, with the exception of each other. Hence, we could merge these nodes.

We introduced a pre-processing step to identify and merge all equivalent nodes.

fn merge_equivalence(&mut self) {

let mut to_merge = Vec::new();

let mut checked_pairs: HashSet<((u32, u32), (u32, u32))> = HashSet::new();

// loop links

for (song_a, neighbors_a) in &self.links {

let mut neighbors_set_a: HashSet<_> = neighbors_a.iter().cloned().collect();

// for each song, check its neighbors

for song_b in neighbors_a {

if checked_pairs.contains(&(song_a.clone(), song_b.clone()))

|| checked_pairs.contains(&(song_b.clone(), song_a.clone()))

{

continue;

}

neighbors_set_a.remove(song_b); // remove a from b

let neighbors_b = self.links.get(song_b).unwrap_or(&vec![]).clone();

let mut neighbors_set_b: HashSet<_> = neighbors_b.iter().cloned().collect();

neighbors_set_b.remove(song_a); // remove b from a

if neighbors_set_a == neighbors_set_b {

to_merge.push((song_a.clone(), song_b.clone()));

}

neighbors_set_a.insert(song_b.clone());

checked_pairs.insert((song_a.clone(), song_b.clone()));

}

}

for pair in to_merge {

self.merge(pair.0.clone(), pair.1.clone());

}

}

This involved examining each node, assessing its neighbors, excluding mutual connections, and then determining equivalence. Subsequently, we combined the weights of two equivalent nodes, eliminated one, and updated the linkage.

fn merge(&mut self, song_a: (u32, u32), song_b: (u32, u32)) {

let weight_a = *self.songs.get(&song_a).unwrap_or(&0);

let weight_b = *self.songs.get(&song_b).unwrap_or(&0);

self.songs.insert(song_a.clone(), weight_a + weight_b);

self.songs.remove(&song_b);

self.links.remove(&song_b);

for neighbors in self.links.values_mut(){

if let Some(pos) = neighbors.iter().position(|x| *x== song_b) {

neighbors.remove(pos);

if !neighbors.contains(&song_a) {

neighbors.push(song_a.clone());

}

}

}

}

This pre-processing enabled us to tidy the graph before DFS without disrupting its linkages.

Ultimately, our solution was accepted, albeit as the slowest...

On the bright side, not only did my solution compute the longest path, but it actually obtained the playlist, thereby exceeding the task's requirements. Moreover, my program seems to have used the least memory compared to other users' dynamic programming (DP) approaches.